Ongoing research

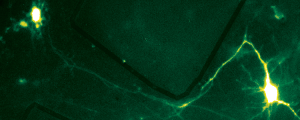

How do distributed neuronal populations end up encoding shared information or working together? Is it a consequence of an actor-critic architecture, or a result of self-organization across replicas of a canonical circuit? These questions are ultimately linked to the problems of catastrophic forgetting and transfer learning in the brain: how do neuronal networks that are continuously driven by new stimuli learn new information while retaining what they already know? And how is information learned by one network transferred to another? Answering these questions is also highly important for the aging brain and mental health, since network-wide dysfunctions signal the onset of several neurodegenerative pathologies and are an interesting target for therapeutic interventions.

My interest in these questions lies not only in their relevance for neuroscience, but also for machine learning and complexity science. Transfer learning and catastrophic forgetting are unsolved issues in deep learning, while in physics, distributed coding is ultimately linked to the presence of "criticality" in the brain, to nonequilibrium phase transitions in complex networks, and to self-organization. I will take an approach that is deeply rooted in physics, one that integrates principles of nonequilibrium statistical physics, dynamical systems, and network science within the constraints of neuroscience.

- Causal inference through control theory and patterned stimulation during behavior

The study of causality in neuroscience is deeply linked to the principles of sufficiency and necessity. Extreme emphasis is put on identifying and isolating a single source of a given behavior or phenomenon, in order to then intervene and prevent that behavior. Instead, I believe that we need to focus on an information-centric definition of causality, i.e., predictive causality, whose predictions can then be verified by combining modeling work and perturbative experiments.
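One common reading of predictive causality is a Granger-style comparison: does the past of one signal improve the prediction of another beyond what that signal's own past already provides? The sketch below illustrates this on synthetic data. It is not a method taken from this research program, and the function names, the lag order, and the simulated coupling strength are all assumptions chosen for the example.

```python
import numpy as np

def lagged_design(series, lags):
    """Columns are series[t-1], ..., series[t-lags] for t = lags .. T-1."""
    T = len(series)
    return np.column_stack([series[lags - k : T - k] for k in range(1, lags + 1)])

def residual_variance(target, design):
    """Variance of the residuals of an ordinary least-squares fit with intercept."""
    design = np.column_stack([np.ones(len(target)), design])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return (target - design @ coef).var()

def predictive_causality(source, target, lags=2):
    """Log ratio of residual variances: target's own past vs. own past plus source's past.

    Values near zero mean the source's past adds no predictive power.
    """
    y = target[lags:]
    own = lagged_design(target, lags)
    other = lagged_design(source, lags)
    restricted = residual_variance(y, own)
    full = residual_variance(y, np.column_stack([own, other]))
    return np.log(restricted / full)

# Synthetic example (assumed for illustration): x drives y with a one-step delay,
# while y has no influence on x.
rng = np.random.default_rng(0)
T = 2000
x = np.zeros(T)
y = np.zeros(T)
for t in range(1, T):
    x[t] = 0.5 * x[t - 1] + rng.standard_normal()
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + rng.standard_normal()

x_to_y = predictive_causality(x, y)
y_to_x = predictive_causality(y, x)
print(f"x -> y: {x_to_y:.3f}, y -> x: {y_to_x:.3f}")
```

An asymmetry between the two directions is only a prediction about directed influence. In the spirit of the paragraph above, it would still need to be checked with a perturbation, for example by stimulating the putative source and measuring the response of the target.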

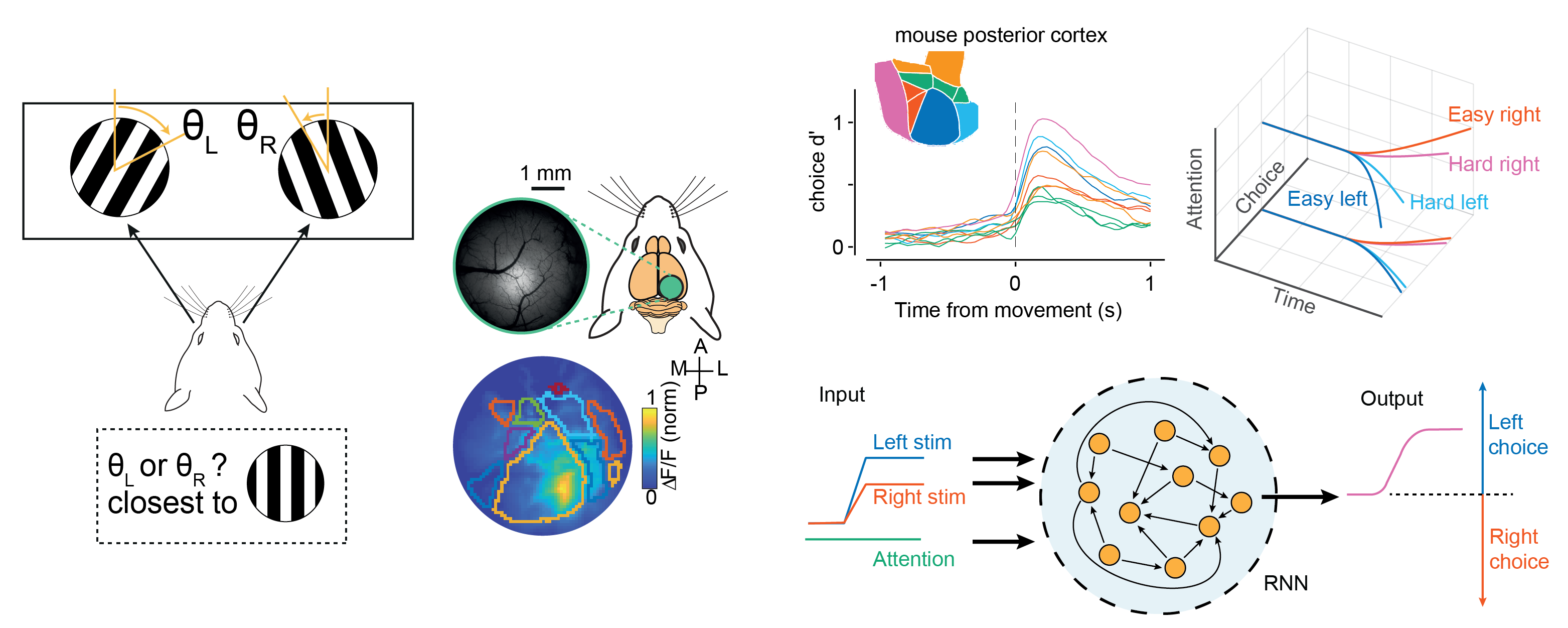

- Principles of distributed coding during decision-making

We now have ample evidence that computations in the cortex are more distributed than previously thought, especially during highly demanding cognitive tasks. However, whether a distributed neural population is really required for the computations, or whether it just encodes and mirrors information computed somewhere else, is currently unknown. The ground-breaking studies behind this evidence have benefited from the latest imaging and recording techniques, but truly concurrent recordings across multiple brain areas do not yet exist; the data are instead stitched together across multiple sessions.

- Context-based and transfer learning

How do networks engaged in distributed computations integrate contextual information? Are computations routed through different smaller modules? Or are they low-dimensional representations of a larger activity mode? Seminal work integrating modeling and experiments has revealed key insights into the low-dimensional representations of these computations [Mante 2013], but unifying principles are yet to be revealed.